info@crossroadelf.com

Welcome to Crossroad Elf

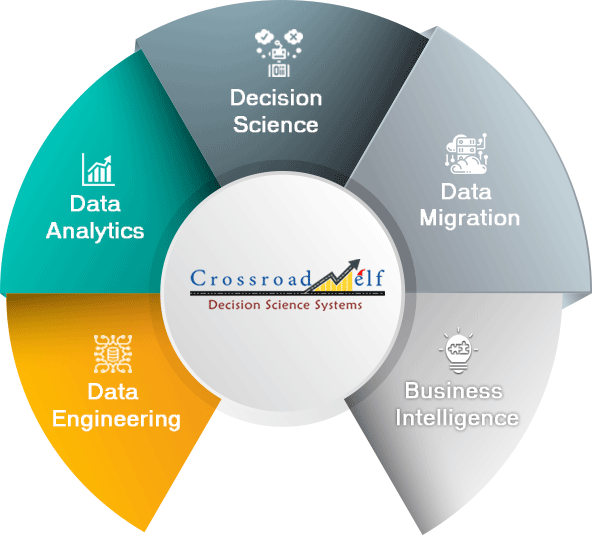

Staffing & Consultancy Services, Data Analytics, Data Engineering and Decision Science Systems.

Crossroad Elf is a promising Staffing and IT Services Company headquartered in Bangalore, India. We are among the innovative and fast-growing Data Analytics, Data Engineering, and Data Science Companies in Bangalore.

Our Consultancy Services portfolio includes Manpower Consulting, Business Consulting, Staff Augmentation, and Contract-to-hire Services.

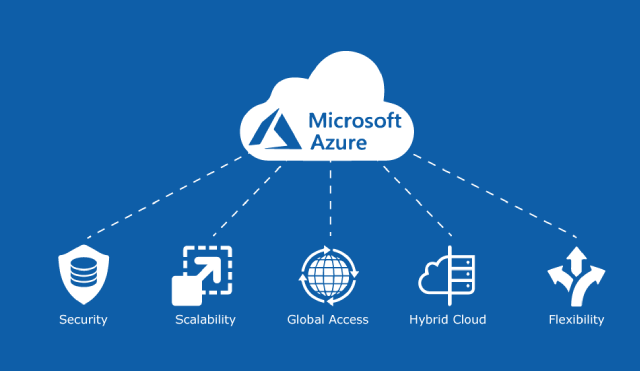

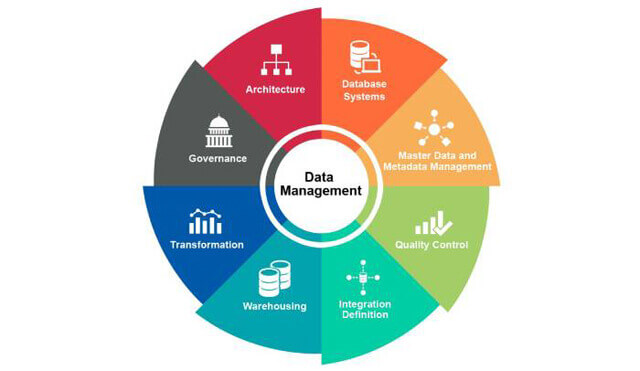

With skilled experts on board, we focus predominantly on Data Analytics, Data Engineering, AWS and Azure Data Engineering, Big Data, Machine Learning, Data Integration, and AI solutions. We bring creative and strategic skills to every project we undertake, harnessing the full power of data for actionable insights that give you a competitive edge in the current market.

We are an Integrated Data Solutions Provider with competitive Data-Driven Strategies for Industries. As one of the advanced Data Analytics, Data Science and Data Engineering Companies in Bangalore, our primary objective is to provide turnkey solutions to customers who are keen on succeeding and achieving sustainable growth in today's business environment. We bring together design, technology and innovation to provide solutions tailored to customer needs and demands. In the process, we create unique experiences and key moments of interaction with a focus on the client’s end goals.

Our services portfolio is wide-ranging. It covers Data Engineering on Cloud Platforms (AWS Data Engineering and Azure Data Engineering), Conventional Data Analytics & Business Intelligence Solutions, Data Mining Consulting, Data Migration Solutions, Data Analysis Services, Data Warehousing Services and Data Science Solutions. With an agile and adaptive project management approach, we create and deliver cost-effective strategies and solutions within the client’s budget and timelines. The result: Revenue, Profit and Growth!

Report Factory

Report Factory, or Insights Service, is a centralized service that meets a business's ongoing reporting needs in a consistent, timely and cost-effective manner. Businesses need actionable intelligence from enterprise data without delay to ensure that their services are aligned to customer needs. Depleting profit margins drive companies to look for alternatives that offload the mundane task of report generation from multiple resources, so that those resources can be redeployed to transformative initiatives focused solely on creating customer value.

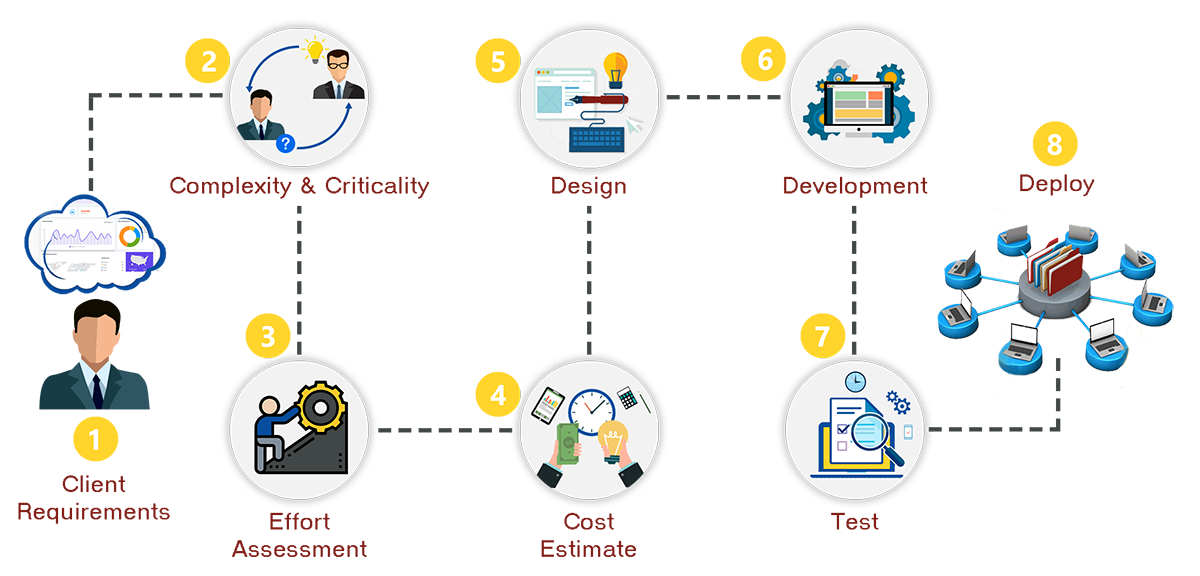

Our Methodology

- Requirements Elicitation
- Design
- Engineer
- Deploy
- Train & Transfer

What Our Customers Say

News and Highlights

Our 3rd anniversary celebration.

Volunteerism is associated with feelings of kindness and positivity! On Feb 7th, 2020, we celebrated our Company’s 3rd anniversary at a children’s orphanage in Bangalore, India. We met some of the nicest souls on earth, who cherish life to the fullest and understand the true value of life and relationships! Our team had fun engaging with the children and playing games with them. The event included cake-cutting, sharing food and gifting the children booklets. Spending quality time with the underprivileged allows you to step out of your usual world and reflect on your life.

Recognition

"Crossroad Elf DSS Pvt. Ltd. endeavours to bring design, technology and innovation under one roof and devise productive solutions best fitted to the needs of its clients."

Data analytics consultants are the newest arbiters for organizations. Organizations approach them to draw constructive solutions from the exploration and scientific scrutiny of valuable data. These analyses help businesses make important and conclusive decisions that benefit their advancement.

Crossroad Elf DSS Pvt. Ltd., an IT Services company in India, delivers compelling Data Analytics and Data Engineering services, driving the growth and development of its clients. This peerless set-up is the brainchild of Raju Chellaton and Punyesh Lakshman Murthy.